Why This Text?

Nowadays, a lot of content is produced using generative AI. Social media posts on platforms like LinkedIn are written by ChatGPT, and the comments come from Gemini. Marketing people use AI creation tools to stay productive even late in the evening. And decision makers ask Copilot how to decide: Where to invest? Whom to fire? Are they doing the right thing? Is AI able to answer their questions honestly?

We all know that AI can create stunning images and text, faster and often better than humans. But what about decision making? What about reasoning? Where are the limits? Are there any limits? In the following, I want to give an overview of recent work related to this question. It’s a collection, not a complete survey. To make reading easier, I ordered the sources by increasing difficulty. I encourage readers not to rely on my excerpts alone, but to follow the links and read at least parts of the original work. Two of the references are in German. You may want to trust ChatGPT with the translation, or do you?

In short: as a teaser for this article, I asked ChatGPT to draw an image of her/his/its eyes, if it had any. Look at these eyes. Can these eyes lie?

The Playful Approach

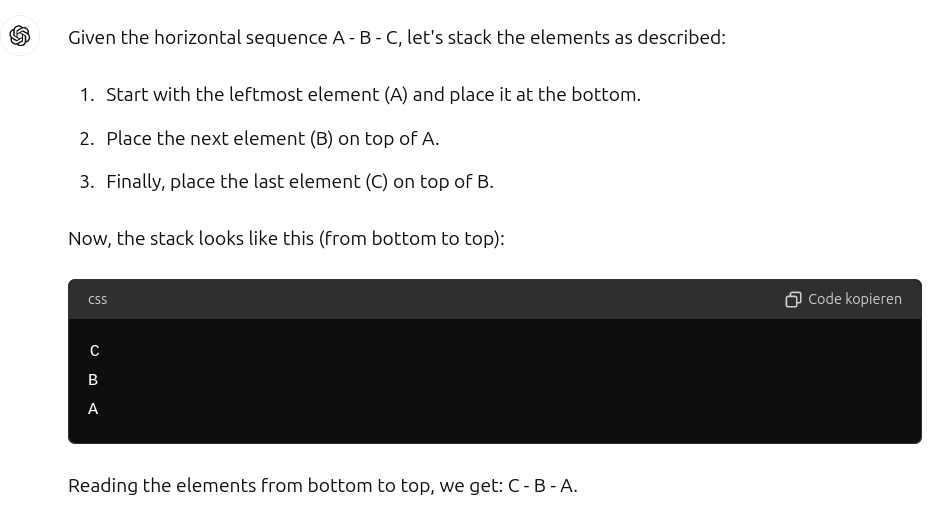

This approach uses fairly simple tasks that can be solved by logical reasoning. Fairly simple in the sense that an average human won’t have any trouble finding the correct answer. The questions are as easy as this one:

Given a horizontal sequence A - B - C. Now stack those three elements by picking the leftmost one first, then placing the next one on top, and so on. Now read the elements from bottom to top.

Sorry, failed. In this simple form, experiments of this kind are well described by

Joe Diercks: „Ist KI ‚intelligent‘? Kann ChatGPT logisch schlussfolgern? Ein einfaches, aber sehr aufschlussreiches Experiment…“ (Is AI "intelligent"? Can ChatGPT reason logically? A simple but very revealing experiment…), Jan. 2024, LinkedIn
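For comparison, the stacking question above can be checked mechanically. The Python sketch below models the stack as a list whose first entry is the bottom; the function names are my own and purely illustrative. Under this (I think natural) reading of the question, reading bottom to top simply returns the original order A - B - C; only reading top to bottom would reverse it.

```python
def stack_left_to_right(sequence):
    """Build a stack from a horizontal sequence: the leftmost element is
    picked first and becomes the bottom; each following element goes on top."""
    stack = []  # index 0 = bottom of the stack, index -1 = top
    for element in sequence:
        stack.append(element)  # place the next element on top
    return stack


def read_bottom_to_top(stack):
    # Bottom to top is simply the list order from index 0 upward.
    return list(stack)


def read_top_to_bottom(stack):
    # For contrast: reading from the top reverses the order.
    return list(reversed(stack))


stack = stack_left_to_right(["A", "B", "C"])
print(" - ".join(read_bottom_to_top(stack)))  # prints "A - B - C"
print(" - ".join(read_top_to_bottom(stack)))  # prints "C - B - A"
```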

A more thorough treatment of the logical reasoning problems of LLMs appeared in June 2024:

M. Nezhurina, L. Cipolina-Kun, M. Cherti, J. Jitsev: Alice in Wonderland: Simple Tasks Showing Complete Reasoning Breakdown in State-Of-the-Art Large Language Models. June 2024, available at arXiv.org

A summary is hardly necessary here; the title speaks for itself: „… Showing Complete Reasoning Breakdown …“.

The Scientific Question

In the previous section, we challenged AI with questions that are simple, at least for us humans. You may call this a bottom-up approach: start with easy tasks and see how far you get. Stephen Wolfram took the opposite path and asked the master question: Can AI solve science? Note, however, that Wolfram’s approach is much more general and not focused on LLMs:

Stephen Wolfram: Can AI Solve Science? Available at Wolfram Research

In most of his experiments, Wolfram uses AI to predict the behavior of cellular automata. It turns out that AI is a useful tool for producing fairly good results, but not precise ones. In addition, as is well known from LLMs, AI has a significant tendency to hallucinate. Definitely not what you want in science.
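To make "predicting the behavior of a cellular automaton" concrete, here is a minimal Python sketch of an elementary cellular automaton: a row of black/white cells in which each cell’s next state depends only on itself and its two neighbors, as dictated by a rule number. Rule 30, one of Wolfram’s best-known examples, is used as the default. The function names, grid width, number of steps, and the convention that cells beyond the edges are white are my own assumptions; I do not claim this matches the exact setups in his essay. A prediction task of the kind discussed there would be to guess later rows without running the update. The exact simulation provides the ground truth against which such "fairly good but not precise" predictions can be measured.

```python
def eca_step(cells, rule=30):
    """One update of an elementary (1D, two-state, nearest-neighbor)
    cellular automaton with the given Wolfram rule number (0..255).
    Assumption: cells beyond the left and right edges are treated as 0."""
    padded = [0] + cells + [0]
    new_cells = []
    for i in range(1, len(padded) - 1):
        # Encode the neighborhood (left, center, right) as a number 0..7.
        pattern = (padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1]
        # Bit number `pattern` of the rule number is the cell's new state.
        new_cells.append((rule >> pattern) & 1)
    return new_cells


def run_eca(width=31, steps=15, rule=30):
    """Start from a single black cell in the middle and collect all rows."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = eca_step(cells, rule)
        rows.append(cells)
    return rows


# Print the evolution: '#' = black cell, '.' = white cell.
for row in run_eca():
    print("".join("#" if c else "." for c in row))
```

Because the update rule is exact and deterministic, any deviation of a learned predictor from these rows is a genuine error, not a matter of interpretation.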

High-Level Point of View

The work discussed so far used examples to illustrate the shortcomings of certain AI methods in solving certain classes of problems. Thinking further, one might be tempted to ask a more general question: Are there fundamental boundaries that AI, as we understand and can build it today, cannot overcome? Are there fundamental laws comparable to the speed of light, the bounds on the performance of error-correcting codes in information theory, or the law of conservation of energy? It seems there are. Gitta Kutyniok gives a very readable overview of current work on the natural limits of AI:

G. Kutyniok: „Ein mathematischer Blick auf zuverlässige künstliche Intelligenz“ (A mathematical view of reliable artificial intelligence), ITG-Newsletter, April 2024. Available online, starting on page 6

The two key takeaways from her article are:

- With our current implementations of AI, there are bounds on the achievable precision for wide classes of problems. Essentially, this means that regardless of how much effort you spend on model complexity, training, or tuning, you cannot push the error below a certain level.

- There is an important class of problems that our current AI is fundamentally unable to solve.

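The latter point concerns inverse problems, i.e., the task of reconstructing original data from measurements, as in image reconstruction for MRI or CT. This is a very common problem in today’s everyday technology. For certain finite-dimensional inverse problems, the following work proves that machines that can be modeled as Turing machines, which includes all of today’s digital hardware, cannot in principle compute solutions beyond a certain accuracy: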

Boche, H., Fono, A., & Kutyniok, G. (2023). Limitations of Deep Learning for Inverse Problems on Digital Hardware. IEEE Transactions on Information Theory, 69(12), 7887-7908. https://doi.org/10.1109/TIT.2023.3326879

An earlier version of this work appears to be available on arXiv.
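For readers new to the term, a common textbook formulation of a finite-dimensional linear inverse problem is sketched below. The notation is mine and only meant as an illustration; the sparse reconstruction in the second line is one standard example, not necessarily the exact setting of the paper. A measurement device, modeled by a known matrix A, maps the unknown data x to measurements y, possibly corrupted by noise e, and the task is to recover x from y. With fewer measurements than unknowns (m < N), infinitely many x are consistent with the data, so additional assumptions are needed; assuming x is sparse leads to the convex program in the second line, where ε bounds the noise level.

```latex
\begin{aligned}
&\text{Measurement model:} && y = A x + e, \quad A \in \mathbb{R}^{m \times N},\ m < N, \\
&\text{Sparse reconstruction:} && \hat{x} \in \arg\min_{x \in \mathbb{R}^{N}} \|x\|_1 \ \text{ subject to } \ \|A x - y\|_2 \le \varepsilon .
\end{aligned}
```

Results like the one cited above concern how accurately such reconstructions can be computed by algorithms running on digital hardware.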

Conclusion

To answer our initial question: Can these eyes lie? Yes, they can, and they do. The capabilities of AI are fundamentally limited: limited in the precision of its estimates, limited in its ability to reason logically, and limited to certain classes of problems.